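I. Installation

1. Installing fio

Online install: yum install -y fio

Offline install:

tar -xvf fio.tar.bz

(GNU tar detects the compression format automatically when extracting, so no -z or -j flag is needed.)

cd fio

rpm -ivh *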

2. Installing the libaio engine

Install the libaio library used by fio's libaio engine:

Online install: yum install -y libaio

Offline install:

tar -zxvf libaio.tar.gz

rpm -ivh libaio/*
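After either installation route, it helps to confirm that fio runs and was built with the libaio engine before starting a long test. A minimal sketch, assuming a POSIX shell; `check_fio_libaio` is a hypothetical helper name and `FIO` is an optional override for the fio binary path:

```shell
# Hypothetical helper: check that fio runs and was built with libaio.
# FIO may name another fio binary; it defaults to "fio" on PATH.
check_fio_libaio() {
  local fio_bin="${FIO:-fio}"
  if ! command -v "$fio_bin" >/dev/null 2>&1; then
    echo "fio not found: $fio_bin" >&2
    return 1
  fi
  "$fio_bin" --version            # e.g. fio-3.7
  # With no argument, --enghelp lists the I/O engines built into this fio.
  if "$fio_bin" --enghelp 2>/dev/null | grep -qw libaio; then
    echo "libaio: available"
  else
    echo "libaio: missing" >&2
    return 1
  fi
}
```

On CentOS, `rpm -q libaio` additionally confirms that the library package itself is installed.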

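Prefer the libaio engine for testing

libaio is Linux's native asynchronous I/O interface. Unlike synchronous engines such as psync, it lets fio keep several I/Os in flight at once, so the iodepth setting actually takes effect and the results better reflect what the disk can do.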
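A minimal single run with the libaio engine, as a sketch. `fio_libaio` is a hypothetical helper, the 1G size and 30-second runtime are placeholder values, and the target should be a scratch file: a write test pointed at a disk that holds data will destroy it. `--direct=1` matters here, because fio's documentation notes that Linux native AIO may only queue I/O asynchronously for non-buffered (O_DIRECT) I/O. Setting `FIO=echo` prints the command line instead of running it (a dry-run convenience of this sketch, not a fio feature).

```shell
# Sketch: one fio run with libaio + O_DIRECT so that iodepth really queues I/O.
fio_libaio() {
  local target="$1" rw="$2"   # scratch file; rw = read|write|randread|randwrite
  ${FIO:-fio} --name="libaio-$rw" --filename="$target" --size=1G \
    --ioengine=libaio --direct=1 \
    --rw="$rw" --bs=4k --iodepth=16 \
    --runtime=30 --time_based
}
```

For example, `fio_libaio /data/fio.test randread`, where `/data/fio.test` is a placeholder path.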

II. Test Environment

OS: CentOS 7.6

File system: xfs

Host spec: 2 CPU cores, 2 GB RAM

I/O engine: libaio

Test tool: fio-3.7

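III. Test Objectives

Use the fio disk benchmark and vary its parameters to simulate how the disk behaves and performs under realistic workloads.

Measure how sustained long-running reads/writes, block size (bs), queue depth (iodepth) and concurrency (numjobs) affect read/write throughput, disk utilization, IOPS and related metrics.

runtime: length of each test run; simulates sustained, long-running reads/writes

bs: size of each individual I/O request

iodepth: number of I/O requests kept in flight at once; simulates how performance changes as requests queue up at the disk

numjobs: number of parallel jobs issuing I/O; simulates performance under high concurrency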
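The four tunables above map one-to-one onto fio options of the same names. As a sketch, assuming a job-file workflow, the hypothetical helper below writes them into a fio job file; the `[global]` section holds settings shared by every job in the file, and `/data/fio.test` is a placeholder path.

```shell
# Hypothetical helper: write a fio job file from the four tunables.
# Arguments: job-file path, rw mode, bs, runtime (s), iodepth, numjobs.
write_job() {
  local job_file="$1" rw="$2" bs="$3" runtime="$4" iodepth="$5" numjobs="$6"
  cat > "$job_file" <<EOF
[global]
ioengine=libaio
direct=1
time_based
runtime=$runtime
group_reporting

[$rw-$bs]
# placeholder test file; point it at a scratch file, not a data disk
filename=/data/fio.test
size=1G
rw=$rw
bs=$bs
iodepth=$iodepth
numjobs=$numjobs
EOF
}
```

Usage: `write_job /tmp/randread-4k.fio randread 4k 60 16 1`, then `fio /tmp/randread-4k.fio`.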

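IV. Test Modes

For each parameter, sequential read, sequential write, random read and random write are tested, recording bandwidth (BW), IOPS and disk utilization.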
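One way to cover the four modes in a single pass, as a sketch: the hypothetical `run_four_modes` helper below loops over fio's `rw` values (`read`, `write`, `randread` and `randwrite` are sequential read, sequential write, random read and random write) and writes each report to its own file via `--output`. Using 4k blocks for random modes and 64k for sequential modes mirrors the runtime and iodepth tests; iodepth=1 and the other values are assumptions. `FIO=echo` prints the commands instead of running them.

```shell
# Sketch: run the four access patterns back to back, one report per mode.
run_four_modes() {
  local target="$1" rw bs
  for rw in read write randread randwrite; do
    case "$rw" in
      rand*) bs=4k ;;   # randread, randwrite
      *)     bs=64k ;;  # read, write
    esac
    ${FIO:-fio} --name="$rw" --filename="$target" --size=1G \
      --ioengine=libaio --direct=1 --iodepth=1 \
      --rw="$rw" --bs="$bs" --runtime=30 --time_based \
      --output="$rw.$bs.log"
  done
}
```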

V. Test Targets

1 Cloud disk (standard I/O)

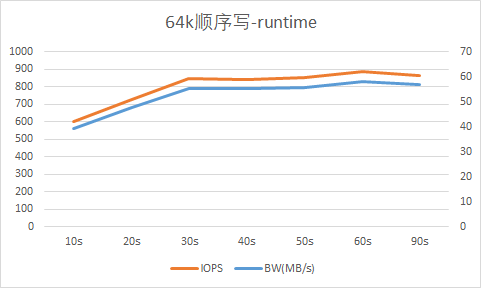

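1.1 runtime

Test content:

Run 4k random read/write and 64k sequential read/write with different runtime values and compare the results, to find how long a run must be before the results become stable.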
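A sketch of the runtime sweep, assuming a hypothetical `runtime_sweep` helper and an illustrative list of runtimes (not the values behind the charts). `--time_based` is what makes a long runtime meaningful: without it, fio stops as soon as it has transferred `size` bytes, even if `runtime` has not yet elapsed.

```shell
# Sketch: same job, growing runtimes, one report per runtime.
# Defaults to 4k random read; pass e.g. "read 64k" for the sequential case.
runtime_sweep() {
  local target="$1" rw="${2:-randread}" bs="${3:-4k}" rt
  for rt in 30 60 120 300 600; do
    ${FIO:-fio} --name="runtime-$rt" --filename="$target" --size=1G \
      --ioengine=libaio --direct=1 --iodepth=1 \
      --rw="$rw" --bs="$bs" --runtime="$rt" --time_based \
      --output="runtime.$rw.$bs.${rt}s.log"
  done
}
```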

Test script:

runtime.fio.conf:

Test command:

Test results:

The results include IOPS, BW and other metrics.
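These figures come from fio's per-direction summary line (`read: IOPS=..., BW=...` or `write: IOPS=..., BW=...`). A minimal awk-based sketch with a hypothetical helper name, which prints the log file name, direction, IOPS and BW for each such line:

```shell
# Hypothetical helper: print "<file> <direction> <IOPS> <BW>" for every
# "read: IOPS=..., BW=..." or "write: IOPS=..., BW=..." line in the given logs.
fio_summary() {
  awk '
    match($0, /(read|write): IOPS=[^,]+, BW=[^ ]+/) {
      s = substr($0, RSTART, RLENGTH)   # e.g. "read: IOPS=1546, BW=1546KiB/s"
      split(s, part, /: IOPS=|, BW=/)   # part[1]=direction, part[2]=IOPS, part[3]=BW
      print FILENAME, part[1], part[2], part[3]
    }' "$@"
}
```

For example, `fio_summary bs.randread/*.log` yields one row per log, ready to paste into a chart.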

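Result charts:

Conclusion:

In the real test environment, lengthen the test time as needed, find after how long IOPS and BW level off for each block size, and derive the IOPS ceiling and the maximum disk throughput, as well as how disk utilization changes during long runs with different block sizes.

(Concrete conclusions will be drawn after testing in the real environment.)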

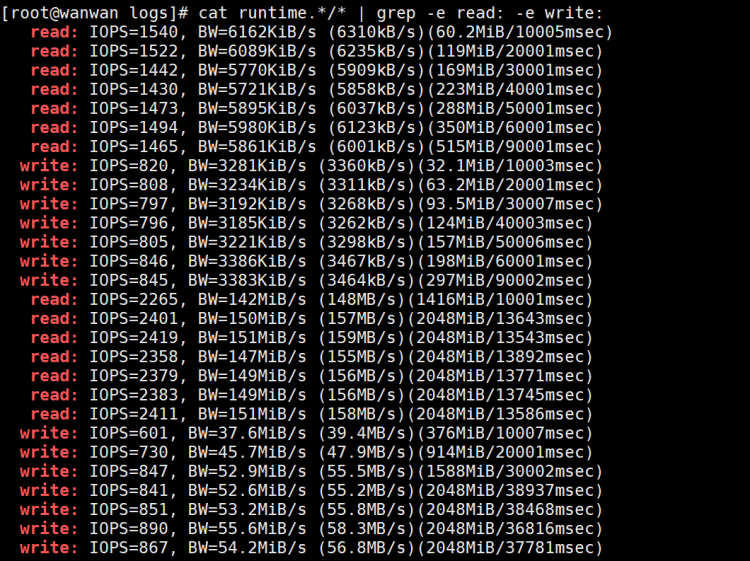

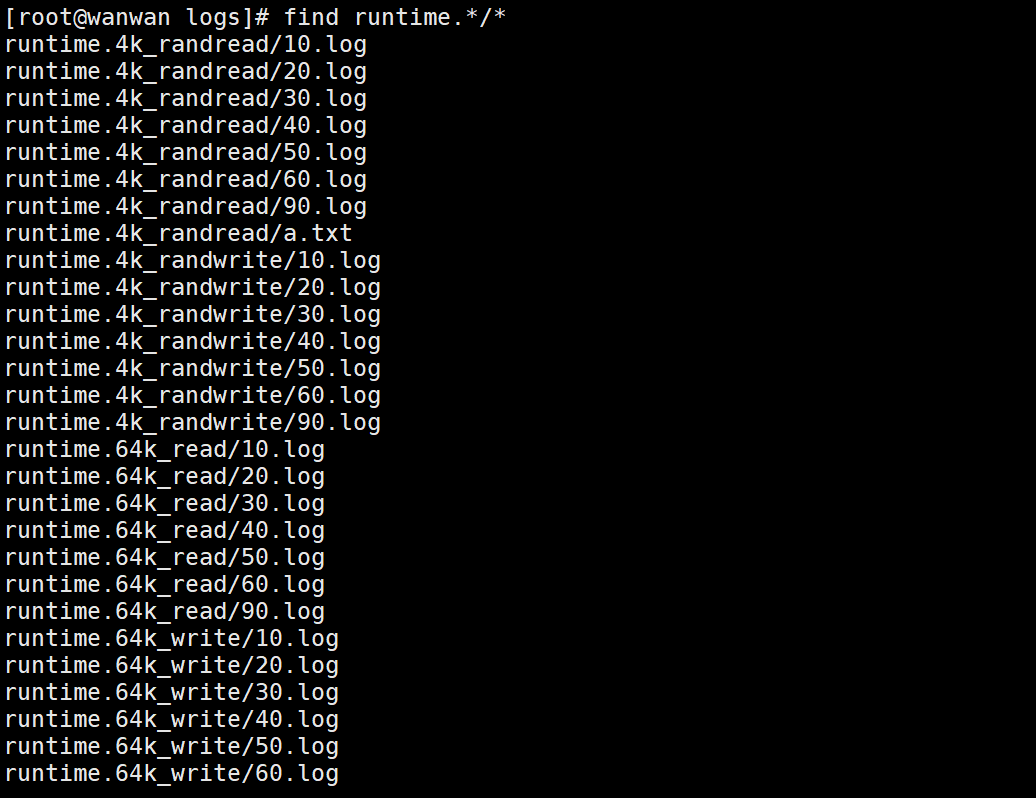

Data extraction command:

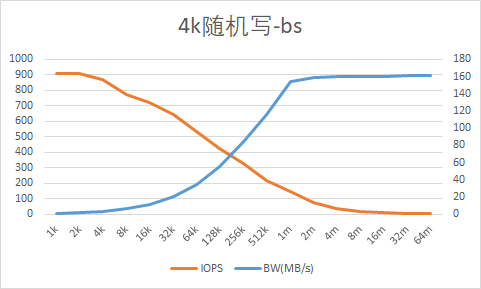

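1.2 bs

Test purpose:

Observe how different block sizes affect disk reads and writes.

Test content:

Test sequential and random reads/writes at different bs (block size) values.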
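A sketch of the bs sweep. The block-size list matches the rows in the results table; the `bs.<rw>/<HH:MM:SS>.<bs>.log` naming is inferred from those log names and may differ from the original script, and iodepth=1 is an assumption. `bs_sweep` is a hypothetical helper; `FIO=echo` prints the commands instead of running them.

```shell
# Sketch: one 30 s run per block size, logs grouped per rw mode.
bs_sweep() {
  local target="$1" rw="$2" bs
  mkdir -p "bs.$rw"
  for bs in 1k 2k 4k 8k 16k 32k 64k 128k 256k 512k 1m 2m 4m 8m 16m 32m 64m; do
    ${FIO:-fio} --name="bs-$rw-$bs" --filename="$target" --size=1G \
      --ioengine=libaio --direct=1 --iodepth=1 \
      --rw="$rw" --bs="$bs" --runtime=30 --time_based \
      --output="bs.$rw/$(date +%H:%M:%S).$bs.log"
  done
}
```

Run it once per mode, e.g. `bs_sweep /data/fio.test randread`, against a scratch file.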

Test script:

Test command:

Data extraction command:

Test results:

| Log file (bs in file name) | IOPS / BW |
| --- | --- |
| bs.randread/16:10:21.1k.log | read: IOPS=1546, BW=1546KiB/s (1584kB/s)(45.3MiB/30006msec) |
| bs.randread/16:11:01.2k.log | read: IOPS=1523, BW=3048KiB/s (3121kB/s)(89.3MiB/30001msec) |
| bs.randread/16:11:42.4k.log | read: IOPS=1471, BW=5885KiB/s (6026kB/s)(172MiB/30011msec) |
| bs.randread/16:12:22.8k.log | read: IOPS=1495, BW=11.7MiB/s (12.2MB/s)(350MiB/30001msec) |
| bs.randread/16:13:03.16k.log | read: IOPS=1357, BW=21.2MiB/s (22.2MB/s)(636MiB/30001msec) |
| bs.randread/16:13:43.32k.log | read: IOPS=1165, BW=36.4MiB/s (38.2MB/s)(1093MiB/30001msec) |
| bs.randread/16:14:24.64k.log | read: IOPS=945, BW=59.1MiB/s (61.9MB/s)(1772MiB/30001msec) |
| bs.randread/16:15:04.128k.log | read: IOPS=757, BW=94.7MiB/s (99.3MB/s)(2841MiB/30001msec) |
| bs.randread/16:15:45.256k.log | read: IOPS=593, BW=148MiB/s (155MB/s)(4449MiB/30001msec) |
| bs.randread/16:16:25.512k.log | read: IOPS=302, BW=151MiB/s (159MB/s)(4536MiB/30001msec) |
| bs.randread/16:17:06.1m.log | read: IOPS=151, BW=152MiB/s (159MB/s)(4613MiB/30396msec) |
| bs.randread/16:17:46.2m.log | read: IOPS=76, BW=152MiB/s (160MB/s)(4650MiB/30514msec) |
| bs.randread/16:18:27.4m.log | read: IOPS=38, BW=152MiB/s (160MB/s)(4652MiB/30520msec) |
| bs.randread/16:19:08.8m.log | read: IOPS=19, BW=152MiB/s (160MB/s)(4656MiB/30534msec) |
| bs.randread/16:19:49.16m.log | read: IOPS=9, BW=153MiB/s (160MB/s)(4656MiB/30520msec) |
| bs.randread/16:20:30.32m.log | read: IOPS=4, BW=153MiB/s (161MB/s)(4672MiB/30506msec) |
| bs.randread/16:21:12.64m.log | read: IOPS=2, BW=153MiB/s (161MB/s)(4672MiB/30487msec) |
| bs.randwrite/16:43:40.1k.log | write: IOPS=912, BW=912KiB/s (934kB/s)(26.7MiB/30009msec) |
| bs.randwrite/16:44:21.2k.log | write: IOPS=910, BW=1821KiB/s (1865kB/s)(53.3MiB/30001msec) |
| bs.randwrite/16:45:01.4k.log | write: IOPS=872, BW=3490KiB/s (3574kB/s)(102MiB/30010msec) |
| bs.randwrite/16:45:42.8k.log | write: IOPS=776, BW=6214KiB/s (6363kB/s)(182MiB/30001msec) |
| bs.randwrite/16:46:22.16k.log | write: IOPS=723, BW=11.3MiB/s (11.9MB/s)(339MiB/30014msec) |
| bs.randwrite/16:47:03.32k.log | write: IOPS=641, BW=20.1MiB/s (21.0MB/s)(602MiB/30001msec) |
| bs.randwrite/16:47:43.64k.log | write: IOPS=537, BW=33.6MiB/s (35.2MB/s)(1008MiB/30002msec) |
| bs.randwrite/16:48:24.128k.log | write: IOPS=426, BW=53.3MiB/s (55.9MB/s)(1600MiB/30002msec) |
| bs.randwrite/16:49:04.256k.log | write: IOPS=326, BW=81.6MiB/s (85.6MB/s)(2449MiB/30001msec) |
| bs.randwrite/16:49:45.512k.log | write: IOPS=221, BW=111MiB/s (116MB/s)(3319MiB/30004msec) |
| bs.randwrite/16:50:25.1m.log | write: IOPS=147, BW=147MiB/s (154MB/s)(4415MiB/30001msec) |
| bs.randwrite/16:51:06.2m.log | write: IOPS=75, BW=152MiB/s (159MB/s)(4550MiB/30006msec) |
| bs.randwrite/16:51:46.4m.log | write: IOPS=38, BW=152MiB/s (160MB/s)(4616MiB/30310msec) |
| bs.randwrite/16:52:27.8m.log | write: IOPS=19, BW=153MiB/s (160MB/s)(4656MiB/30506msec) |
| bs.randwrite/16:53:08.16m.log | write: IOPS=9, BW=152MiB/s (160MB/s)(4656MiB/30550msec) |
| bs.randwrite/16:53:49.32m.log | write: IOPS=4, BW=153MiB/s (161MB/s)(4672MiB/30505msec) |
| bs.randwrite/16:54:30.64m.log | write: IOPS=2, BW=153MiB/s (161MB/s)(4672MiB/30519msec) |
| bs.read/15:47:21.1k.log | read: IOPS=3315, BW=3316KiB/s (3396kB/s)(97.2MiB/30001msec) |
| bs.read/15:48:02.2k.log | read: IOPS=3163, BW=6327KiB/s (6479kB/s)(185MiB/30001msec) |
| bs.read/15:48:42.4k.log | read: IOPS=3109, BW=12.1MiB/s (12.7MB/s)(364MiB/30001msec) |
| bs.read/15:49:23.8k.log | read: IOPS=3678, BW=28.7MiB/s (30.1MB/s)(862MiB/30001msec) |
| bs.read/15:50:03.16k.log | read: IOPS=3643, BW=56.9MiB/s (59.7MB/s)(1708MiB/30001msec) |
| bs.read/15:50:44.32k.log | read: IOPS=3036, BW=94.9MiB/s (99.5MB/s)(2847MiB/30001msec) |
| bs.read/15:51:24.64k.log | read: IOPS=2301, BW=144MiB/s (151MB/s)(4316MiB/30001msec) |
| bs.read/15:52:05.128k.log | read: IOPS=1203, BW=150MiB/s (158MB/s)(4514MiB/30001msec) |
| bs.read/15:52:45.256k.log | read: IOPS=608, BW=152MiB/s (159MB/s)(4633MiB/30481msec) |
| bs.read/15:53:26.512k.log | read: IOPS=304, BW=152MiB/s (160MB/s)(4650MiB/30511msec) |
| bs.read/15:54:07.1m.log | read: IOPS=152, BW=152MiB/s (160MB/s)(4650MiB/30513msec) |
| bs.read/15:54:48.2m.log | read: IOPS=76, BW=152MiB/s (160MB/s)(4648MiB/30526msec) |
| bs.read/15:55:29.4m.log | read: IOPS=38, BW=153MiB/s (160MB/s)(4652MiB/30490msec) |
| bs.read/15:56:10.8m.log | read: IOPS=19, BW=153MiB/s (160MB/s)(4656MiB/30531msec) |
| bs.read/15:56:51.16m.log | read: IOPS=9, BW=153MiB/s (160MB/s)(4656MiB/30507msec) |
| bs.read/15:57:32.32m.log | read: IOPS=4, BW=153MiB/s (161MB/s)(4672MiB/30515msec) |
| bs.read/15:58:13.64m.log | read: IOPS=2, BW=153MiB/s (161MB/s)(4672MiB/30490msec) |
| bs.write/15:33:24.1k.log | write: IOPS=1571, BW=1572KiB/s (1609kB/s)(46.0MiB/30002msec) |
| bs.write/15:34:04.2k.log | write: IOPS=1498, BW=2998KiB/s (3070kB/s)(87.8MiB/30001msec) |
| bs.write/15:34:45.4k.log | write: IOPS=1451, BW=5805KiB/s (5944kB/s)(170MiB/30001msec) |
| bs.write/15:35:25.8k.log | write: IOPS=1350, BW=10.5MiB/s (11.1MB/s)(317MiB/30001msec) |
| bs.write/15:36:06.16k.log | write: IOPS=1275, BW=19.9MiB/s (20.9MB/s)(598MiB/30001msec) |
| bs.write/15:36:46.32k.log | write: IOPS=1085, BW=33.9MiB/s (35.6MB/s)(1017MiB/30001msec) |
| bs.write/15:37:27.64k.log | write: IOPS=872, BW=54.5MiB/s (57.2MB/s)(1635MiB/30001msec) |
| bs.write/15:38:07.128k.log | write: IOPS=633, BW=79.2MiB/s (83.1MB/s)(2377MiB/30001msec) |
| bs.write/15:38:48.256k.log | write: IOPS=419, BW=105MiB/s (110MB/s)(3148MiB/30011msec) |
| bs.write/15:39:28.512k.log | write: IOPS=244, BW=122MiB/s (128MB/s)(3662MiB/30004msec) |
| bs.write/15:40:09.1m.log | write: IOPS=150, BW=150MiB/s (157MB/s)(4505MiB/30004msec) |
| bs.write/15:40:49.2m.log | write: IOPS=75, BW=151MiB/s (158MB/s)(4560MiB/30253msec) |
| bs.write/15:41:30.4m.log | write: IOPS=38, BW=152MiB/s (160MB/s)(4652MiB/30509msec) |
| bs.write/15:42:11.8m.log | write: IOPS=19, BW=153MiB/s (160MB/s)(4656MiB/30506msec) |
| bs.write/15:42:52.16m.log | write: IOPS=9, BW=153MiB/s (160MB/s)(4656MiB/30498msec) |
| bs.write/15:43:33.32m.log | write: IOPS=4, BW=153MiB/s (160MB/s)(4672MiB/30553msec) |
| bs.write/15:44:14.64m.log | write: IOPS=2, BW=153MiB/s (161MB/s)(4672MiB/30479msec) |
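As a quick consistency check on the table, bandwidth is simply IOPS multiplied by block size. The worked values below are read straight from the rows above, in the KiB/MiB units fio prints:

```latex
\begin{aligned}
\mathrm{BW} &= \mathrm{IOPS} \times bs \\
\text{4k randread: } 1471\ \mathrm{s^{-1}} \times 4\ \mathrm{KiB} &= 5884\ \mathrm{KiB/s} \quad (\text{reported } 5885\ \mathrm{KiB/s}) \\
\text{64k read: } 2301\ \mathrm{s^{-1}} \times 64\ \mathrm{KiB} &= 147264\ \mathrm{KiB/s} \approx 143.8\ \mathrm{MiB/s} \quad (\text{reported } 144\ \mathrm{MiB/s}) \\
\text{1m randread: } 151\ \mathrm{s^{-1}} \times 1\ \mathrm{MiB} &= 151\ \mathrm{MiB/s} \quad (\text{reported } 152\ \mathrm{MiB/s})
\end{aligned}
```

The small gaps come from fio printing IOPS as whole numbers, which is also why the largest sizes look off: at 64m, 2 × 64 MiB/s = 128 MiB/s against 153 MiB/s reported, because the true rate is about 153 / 64 ≈ 2.4 IOPS. Read this way, every mode levels off at roughly 150 to 153 MiB/s while IOPS halves with each doubling of bs, which is consistent with a throughput cap on this disk.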

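Result chart (BW and IOPS):

Result chart (BW and disk utilization):

Command to extract the line-chart data:

Conclusion:

To be summarized after formal testing on Tianyi Cloud (天翼云).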

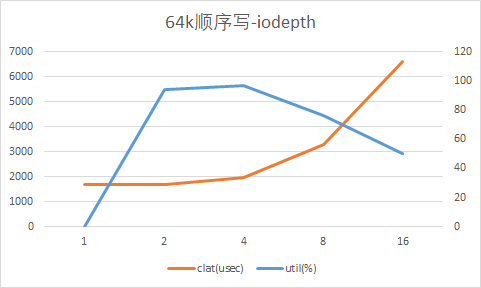

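1.3 iodepth

Test purpose:

Observe how different iodepth values affect disk reads and writes.

Test content:

Measure how the results change with iodepth under random and sequential reads/writes.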
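A sketch of the iodepth sweep. The depths 1, 2, 4, 8 and 16 and the `iodepth.<bs>_<rw>/` directory naming follow the log names in the results table; the remaining values and the `iodepth_sweep` helper name are assumptions. `FIO=echo` prints the commands instead of running them.

```shell
# Sketch: one run per queue depth; libaio + direct=1 so the depth is real.
iodepth_sweep() {
  local target="$1" rw="$2" bs="$3" depth
  local dir="iodepth.${bs}_$rw"
  mkdir -p "$dir"
  for depth in 1 2 4 8 16; do
    ${FIO:-fio} --name="qd$depth" --filename="$target" --size=1G \
      --ioengine=libaio --direct=1 --iodepth="$depth" \
      --rw="$rw" --bs="$bs" --runtime=30 --time_based \
      --output="$dir/$(date +%H:%M:%S).$depth.log"
  done
}
```

The four cases in the table correspond to calls such as `iodepth_sweep /data/fio.test randread 4k`, `randwrite 4k`, `read 64k` and `write 64k`.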

Test script:

Test command:

Command to extract the test results:

Test results:

| Log file (iodepth in file name) | IOPS / BW | Disk stats (incl. utilization) |
| --- | --- | --- |
| iodepth.4k_randread/09:06:47.1.log | read: IOPS=1555, BW=6224KiB/s (6373kB/s)(182MiB/30001msec) | vda: ios=46521/28, merge=0/30, ticks=29092/31, in_queue=80, util=0.23% |
| iodepth.4k_randread/09:07:27.2.log | read: IOPS=3403, BW=13.3MiB/s (13.9MB/s)(399MiB/30001msec) | vda: ios=101786/32, merge=0/27, ticks=58157/11, in_queue=29614, util=98.71% |
| iodepth.4k_randread/09:08:08.4.log | read: IOPS=5030, BW=19.7MiB/s (20.6MB/s)(590MiB/30016msec) | vda: ios=150306/32, merge=0/36, ticks=118129/25, in_queue=84237, util=93.88% |
| iodepth.4k_randread/09:08:48.8.log | read: IOPS=5107, BW=19.0MiB/s (20.9MB/s)(606MiB/30371msec) | vda: ios=155108/15, merge=0/35, ticks=236980/1966, in_queue=129021, util=60.22% |
| iodepth.4k_randread/09:09:29.16.log | read: IOPS=5082, BW=19.9MiB/s (20.8MB/s)(606MiB/30524msec) | vda: ios=155183/34, merge=0/40, ticks=467845/11787, in_queue=149224, util=32.35% |
| iodepth.4k_randwrite/09:12:11.1.log | write: IOPS=897, BW=3589KiB/s (3675kB/s)(105MiB/30009msec) | vda: ios=0/26854, merge=0/40, ticks=0/29322, in_queue=23, util=0.05% |
| iodepth.4k_randwrite/09:12:52.2.log | write: IOPS=1852, BW=7409KiB/s (7586kB/s)(217MiB/30001msec) | vda: ios=0/55344, merge=0/33, ticks=0/58780, in_queue=29687, util=98.94% |
| iodepth.4k_randwrite/09:13:32.4.log | write: IOPS=3767, BW=14.7MiB/s (15.4MB/s)(442MiB/30002msec) | vda: ios=0/112748, merge=0/32, ticks=0/118043, in_queue=88933, util=99.09% |
| iodepth.4k_randwrite/09:14:13.8.log | write: IOPS=5018, BW=19.6MiB/s (20.6MB/s)(588MiB/30002msec) | vda: ios=1/150121, merge=0/36, ticks=117/234758, in_queue=153031, util=73.13% |
| iodepth.4k_randwrite/09:14:53.16.log | write: IOPS=5089, BW=19.9MiB/s (20.8MB/s)(606MiB/30486msec) | vda: ios=0/155150, merge=0/42, ticks=0/475526, in_queue=187069, util=41.02% |
| iodepth.64k_read/09:22:22.1.log | read: IOPS=2301, BW=144MiB/s (151MB/s)(4316MiB/30001msec) | vda: ios=68781/35, merge=0/27, ticks=28957/35, in_queue=39, util=0.05% |
| iodepth.64k_read/09:23:03.2.log | read: IOPS=2399, BW=150MiB/s (157MB/s)(4505MiB/30037msec) | vda: ios=72134/14, merge=0/43, ticks=57789/402, in_queue=22511, util=72.83% |
| iodepth.64k_read/09:23:43.4.log | read: IOPS=2438, BW=152MiB/s (160MB/s)(4650MiB/30513msec) | vda: ios=74394/23, merge=0/33, ticks=117517/8177, in_queue=50791, util=53.24% |
| iodepth.64k_read/09:24:24.8.log | read: IOPS=2439, BW=152MiB/s (160MB/s)(4650MiB/30503msec) | vda: ios=74395/30, merge=0/25, ticks=236077/14064, in_queue=86742, util=39.30% |
| iodepth.64k_read/09:25:05.16.log | read: IOPS=2437, BW=152MiB/s (160MB/s)(4650MiB/30523msec) | vda: ios=74391/38, merge=0/39, ticks=471465/18968, in_queue=165040, util=35.67% |
| iodepth.64k_write/09:18:25.1.log | write: IOPS=581, BW=36.3MiB/s (38.1MB/s)(1090MiB/30001msec) | vda: ios=3/17401, merge=0/43, ticks=0/29470, in_queue=44, util=0.10% |
| iodepth.64k_write/09:19:05.2.log | write: IOPS=1166, BW=72.9MiB/s (76.5MB/s)(2188MiB/30002msec) | vda: ios=0/34944, merge=0/43, ticks=0/58604, in_queue=28252, util=94.06% |
| iodepth.64k_write/09:19:46.4.log | write: IOPS=2034, BW=127MiB/s (133MB/s)(3816MiB/30002msec) | vda: ios=2/60874, merge=0/46, ticks=65/115950, in_queue=86781, util=97.09% |
| iodepth.64k_write/09:20:26.8.log | write: IOPS=2427, BW=152MiB/s (159MB/s)(4553MiB/30002msec) | vda: ios=1/72518, merge=0/42, ticks=72/235636, in_queue=158374, util=76.09% |
| iodepth.64k_write/09:21:07.16.log | write: IOPS=2408, BW=151MiB/s (158MB/s)(4537MiB/30135msec) | vda: ios=12/72605, merge=0/56, ticks=137/470607, in_queue=223307, util=50.05% |
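The third column comes from the `Disk stats` block that fio prints at the end of a run on Linux. To chart utilization, a sketch with a hypothetical helper that pulls the `util=` percentage out of each log:

```shell
# Hypothetical helper: print "<file> <util%>" from fio's Disk stats line,
# e.g. "vda: ios=46521/28, ..., util=0.23%" becomes "<file> 0.23".
fio_util() {
  awk 'match($0, /util=[0-9.]+%/) {
         # skip the 5 characters of "util=" and drop the trailing "%"
         print FILENAME, substr($0, RSTART + 5, RLENGTH - 6)
       }' "$@"
}
```

For example, `fio_util iodepth.4k_randread/*.log`.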

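lat is the total latency, slat is the submission latency (the time taken to submit the I/O to the kernel), and clat is the completion latency (the time from submission until the I/O completes), so lat = slat + clat. slat is usually fairly stable, so clat is used here to judge I/O latency.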
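A rough way to relate latency to the iodepth results, assuming fio keeps the queue full with a single job: by Little's law, the mean latency is about iodepth divided by IOPS. Applied to the 4k random-read rows of the table:

```latex
\begin{aligned}
\overline{\mathrm{lat}} &\approx \frac{\mathrm{iodepth}}{\mathrm{IOPS}} \\
\mathrm{iodepth}=1:\quad \overline{\mathrm{lat}} &\approx \frac{1}{1555\ \mathrm{s^{-1}}} \approx 0.64\ \mathrm{ms} \\
\mathrm{iodepth}=16:\quad \overline{\mathrm{lat}} &\approx \frac{16}{5082\ \mathrm{s^{-1}}} \approx 3.15\ \mathrm{ms}
\end{aligned}
```

IOPS rises from 1555 to about 5000 by iodepth=4 and then stays flat, so beyond that point extra depth mainly adds queueing time. These are estimates only and should be checked against the clat figures in the fio logs.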

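Result charts:

4k random read (IOPS, bandwidth, latency, disk utilization):

4k random write (IOPS, bandwidth, latency, disk utilization):

64k sequential read (IOPS, bandwidth, latency, disk utilization):

64k sequential write (IOPS, bandwidth, latency, disk utilization):

Conclusion: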

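1.4 numjobs

Test purpose:

Measure how different numjobs values affect disk read/write performance.

Test content:

Measure how numjobs affects each disk metric under random and sequential reads/writes.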
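A sketch of the numjobs sweep. numjobs starts that many identical clones of the job, each issuing I/O independently; `--group_reporting` makes fio report them as one aggregate instead of one block per clone, which keeps the IOPS/BW lines comparable across runs. The job counts, directory naming and `numjobs_sweep` helper name are assumptions, since this section lists no values. `FIO=echo` prints the commands instead of running them.

```shell
# Sketch: one run per job count, clones aggregated by --group_reporting.
numjobs_sweep() {
  local target="$1" rw="$2" bs="$3" jobs
  local dir="numjobs.${bs}_$rw"
  mkdir -p "$dir"
  for jobs in 1 2 4 8 16; do
    ${FIO:-fio} --name="nj$jobs" --filename="$target" --size=1G \
      --ioengine=libaio --direct=1 --iodepth=1 \
      --numjobs="$jobs" --group_reporting \
      --rw="$rw" --bs="$bs" --runtime=30 --time_based \
      --output="$dir/$(date +%H:%M:%S).$jobs.log"
  done
}
```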

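Test script:

Test command:

Test results:

The results include IOPS, BW, disk utilization and other metrics.

Result charts:

4k random read (IOPS, bandwidth, latency, disk utilization):

4k random write (IOPS, bandwidth, latency, disk utilization):

64k sequential read (IOPS, bandwidth, latency, disk utilization):

64k sequential write (IOPS, bandwidth, latency, disk utilization):

Conclusion: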

Summary

Appendix:

fio parameters

- Author: 共倒金荷家万里

- URL: https://tangly1024.com/article/11140ef0-3786-8096-acfc-de36396b784a

- Copyright: All articles in this blog, except for special statements, adopt BY-NC-SA agreement. Please indicate the source!
